OpenSearch Vector Engine

To power artificial intelligence (AI) apps at scale, you need a database specially designed for the way AI understands data. OpenSearch Vector Engine is designed for accuracy, speed, and scalability, enabling you to build stable AI applications on a proven platform that scales to tens of billions of vectors, with low latency and high availability built in. Unlike traditional databases, which struggle with unstructured information, vector databases are designed for high-dimensional data, delivering lightning-fast results for chatbots, recommendations, image search, and other AI use cases.

OpenSearch Vector Engine for vector data

OpenSearch Vector Engine brings together the power of traditional search, analytics, and vector search in one complete package. By using OpenSearch as a vector database, organizations can accelerate AI development by reducing the effort builders need to operationalize, manage, and integrate AI-generated assets.

Bring your models, vectors, and metadata into OpenSearch to power vector, lexical, and hybrid search and analytics—with performance and scalability built in.

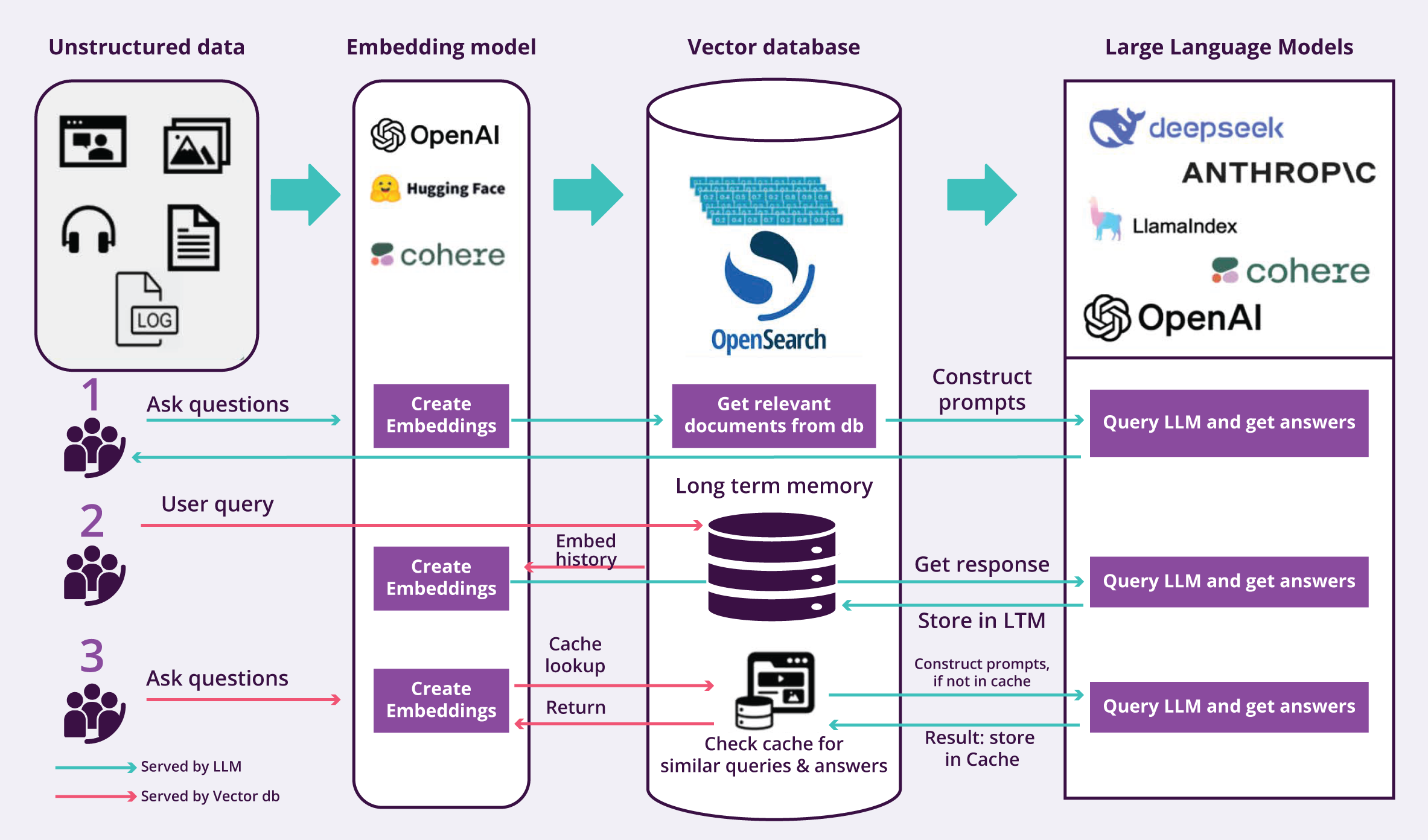

What is a vector database?

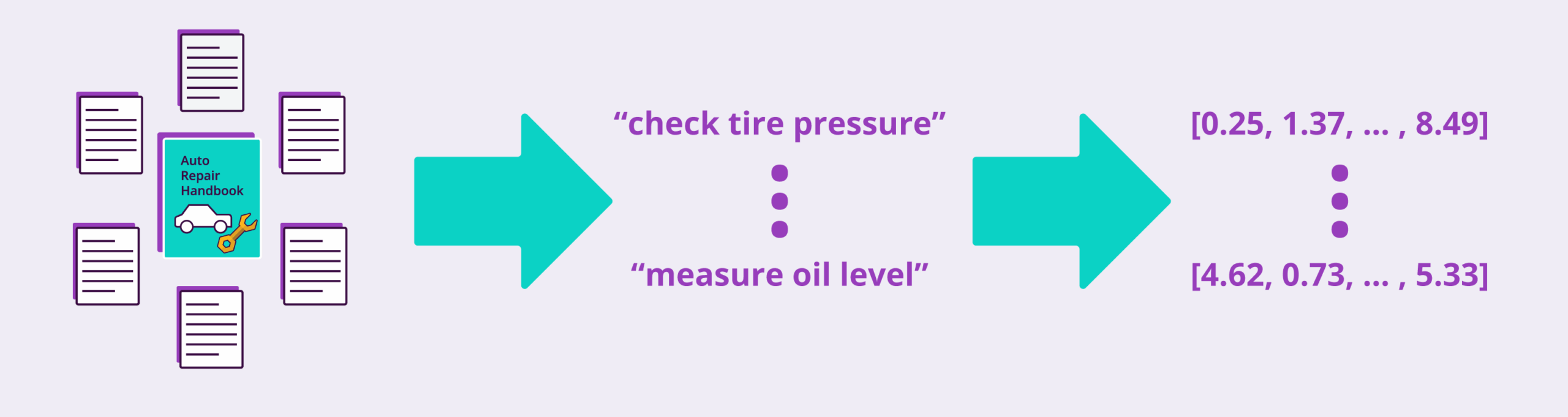

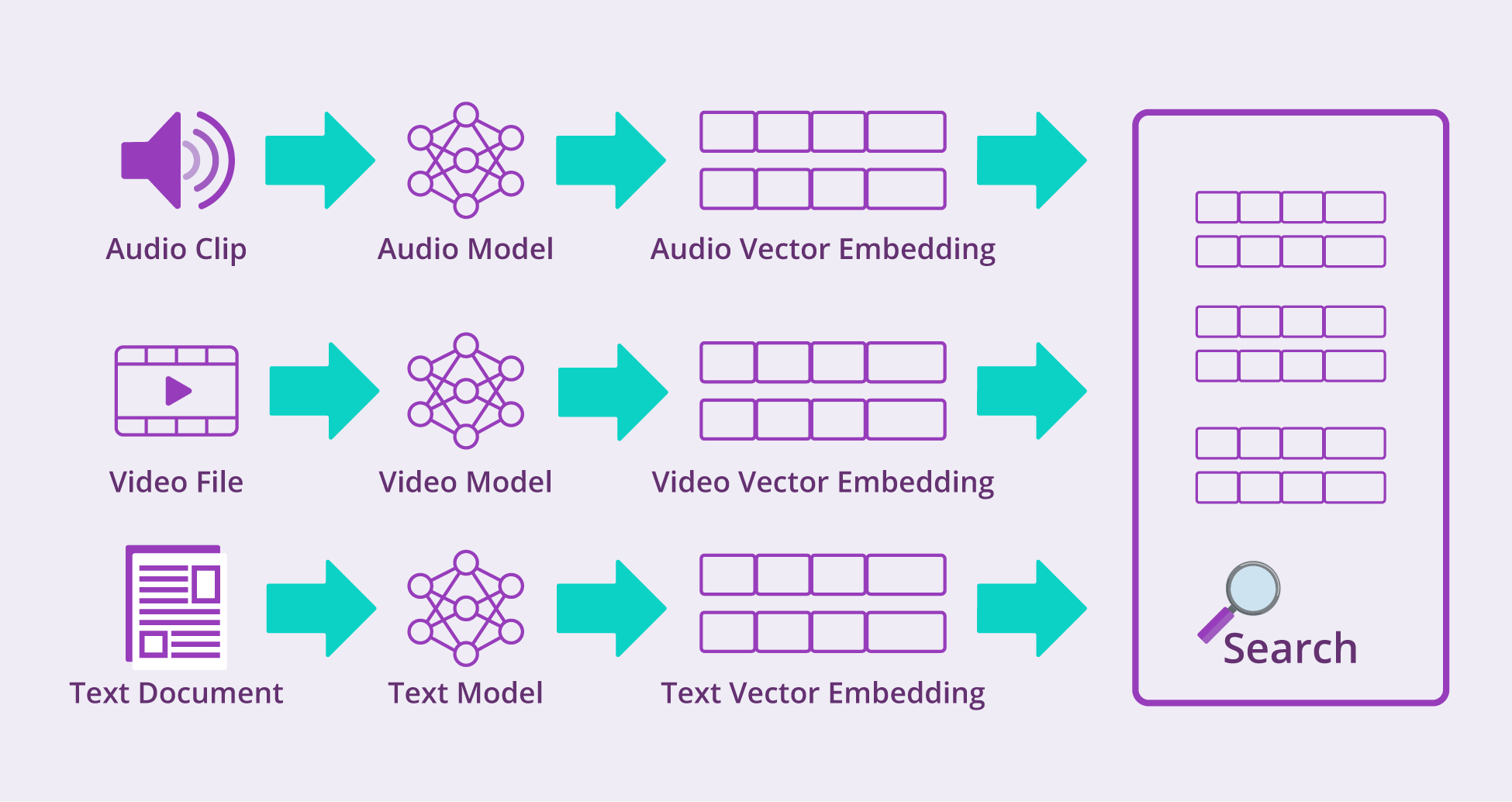

Information exists in various forms, from unstructured data like text documents, rich media, and audio to structured data like geospatial coordinates, tables, and graphs. AI advances have made it possible to encode all these data types into vectors, known as embeddings, using machine learning models. These vectors represent data points in a high-dimensional space, capturing the meaning and context of each asset. By measuring the distance between data points, search tools can efficiently identify similar assets based on their proximity in this space.
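To make "proximity in this space" concrete, here is a minimal sketch that compares toy embeddings with cosine similarity, one common proximity measure. The three-dimensional vectors and their labels are hypothetical stand-ins; real embedding models typically produce hundreds of dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means they point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical 3-dimensional "embeddings" standing in for real model output.
embeddings = {
    "cat photo": [0.9, 0.1, 0.0],
    "kitten photo": [0.85, 0.15, 0.05],
    "invoice scan": [0.0, 0.2, 0.95],
}

query = embeddings["cat photo"]
for name, vec in embeddings.items():
    # Semantically related assets score close to 1.0; unrelated ones score near 0.
    print(f"{name}: {cosine_similarity(query, vec):.3f}")
```

The two photos land close together while the invoice scan sits far away, which is the property similarity search relies on.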

Reduce search costs, improve results

Vector databases enable fast, low-latency similarity searches by storing and indexing vectors alongside metadata. With OpenSearch’s k-nearest neighbors (k-NN) functionality, powered by approximate nearest neighbor indexing algorithms such as Hierarchical Navigable Small World (HNSW) and Inverted File Index (IVF), you can efficiently run high-performance queries on vectorized data.
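The idea behind IVF can be sketched in a few lines: vectors are bucketed by their nearest centroid, and a query scans only the `nprobe` closest buckets instead of every vector. This toy version is an illustration, not OpenSearch’s or Faiss’s implementation; the hand-picked centroids are an assumption (a real IVF index learns them, typically with k-means), and HNSW, a graph-based alternative, is not shown.

```python
import math
from collections import defaultdict


def l2(a, b):
    """Euclidean distance between two vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


class ToyIVFIndex:
    """Minimal IVF-style index: one inverted list of vectors per centroid."""

    def __init__(self, centroids):
        # Assumption: centroids are supplied; real IVF indexes learn them from data.
        self.centroids = centroids
        self.lists = defaultdict(list)  # centroid index -> [(doc_id, vector), ...]

    def _nearest_centroids(self, vec, n):
        order = sorted(range(len(self.centroids)), key=lambda i: l2(vec, self.centroids[i]))
        return order[:n]

    def add(self, doc_id, vec):
        # Each vector is stored only in the list of its closest centroid.
        bucket = self._nearest_centroids(vec, 1)[0]
        self.lists[bucket].append((doc_id, vec))

    def search(self, query, k, nprobe=1):
        # Scan only the nprobe closest lists; vectors in other lists are never scored.
        candidates = []
        for bucket in self._nearest_centroids(query, nprobe):
            for doc_id, vec in self.lists[bucket]:
                candidates.append((l2(query, vec), doc_id))
        candidates.sort()
        return [doc_id for _, doc_id in candidates[:k]]


index = ToyIVFIndex(centroids=[[0.0, 0.0], [10.0, 10.0]])
for doc_id, vec in {"a": [0.5, 0.2], "b": [1.0, 1.5], "c": [9.5, 10.2], "d": [11.0, 9.0]}.items():
    index.add(doc_id, vec)

print(index.search([0.0, 0.5], k=2))            # probes one list
print(index.search([5.0, 5.0], k=3, nprobe=2))  # probes both lists
```

Raising `nprobe` scans more lists, trading latency for recall: with `nprobe=1`, a query can return fewer than `k` results when its nearest list is small.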

Beyond search, vector databases enhance k-NN with robust data management, fault tolerance, resource access controls, and powerful query engines, providing a strong foundation for AI-driven applications.

Trusted in production

Power AI applications on a mature search and analytics engine trusted in production by tens of thousands of users.

Proven at scale

Build stable applications with a data platform proven to scale to tens of billions of vectors, with low latency and high availability.

Open and flexible

Choose open-source tools and leverage integrations with popular open frameworks, with the option of using managed services from major cloud providers.

Build for the future

Future-proof your AI applications with vector, lexical, and hybrid search, analytics, and observability, all in one suite.

Key features

k-NN search

Use low-latency queries to discover assets by degree of similarity through k-nearest neighbors (k-NN) functionality.
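As a sketch of what a k-NN setup can look like, the Python below builds the JSON bodies for creating a vector index and running a k-NN query, following the OpenSearch k-NN plugin’s `knn_vector` mapping and `knn` query format as we understand it. The index name `products`, the field names, the dimension, and the HNSW parameters are hypothetical; check the documentation for your OpenSearch version before relying on specific parameters or defaults.

```python
import json

# Hypothetical index and field names; dimension 3 keeps the example small.
index_body = {
    "settings": {"index": {"knn": True}},  # enables k-NN on this index
    "mappings": {
        "properties": {
            "embedding": {
                "type": "knn_vector",
                "dimension": 3,
                "method": {
                    "name": "hnsw",       # graph-based approximate index
                    "space_type": "l2",   # distance metric
                    "engine": "faiss",
                    "parameters": {"m": 16, "ef_construction": 128},
                },
            },
            "title": {"type": "text"},
        }
    },
}

# Ask for the 2 stored vectors nearest to the query vector.
query_body = {
    "size": 2,
    "query": {"knn": {"embedding": {"vector": [0.1, 0.2, 0.3], "k": 2}}},
}

# These bodies would be sent as PUT /products and POST /products/_search.
print(json.dumps(index_body, indent=2))
print(json.dumps(query_body, indent=2))
```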

Vector quantization support

Improve performance and cost by reducing your index size and query latency with minimal impact on recall.
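One common way quantization shrinks an index is scalar quantization: each 4-byte float is mapped to a 1-byte code. The minimal sketch below shows the generic technique, not OpenSearch’s specific quantization options. The value range `[-1.0, 1.0]` is an assumption chosen for the example.

```python
def quantize(vec, lo, hi):
    """Map each float in [lo, hi] to an integer code 0..255 (1 byte instead of 4)."""
    scale = (hi - lo) / 255
    # Clamp out-of-range values, then round to the nearest code.
    return bytes(round((min(max(x, lo), hi) - lo) / scale) for x in vec)


def dequantize(codes, lo, hi):
    """Recover approximate floats from the 1-byte codes."""
    scale = (hi - lo) / 255
    return [lo + c * scale for c in codes]


vec = [-0.82, 0.03, 0.47, 0.99]
codes = quantize(vec, lo=-1.0, hi=1.0)
approx = dequantize(codes, lo=-1.0, hi=1.0)

print(len(vec) * 4, "bytes as float32 ->", len(codes), "bytes as uint8")
print("max reconstruction error:", max(abs(a - b) for a, b in zip(vec, approx)))
```

The index becomes four times smaller, and each value is off by at most half a quantization step, which is why distances, and therefore recall, usually change only slightly.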

Intelligent filtering

Combine vector search with metadata filters, applying intelligent strategies that optimize both recall and latency.
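Why filtering needs a strategy at all can be seen in a small sketch contrasting two naive approaches over a brute-force search. This is not how OpenSearch selects its filtering strategy; it only illustrates the recall problem such strategies address. The product data and the `in_stock` field are hypothetical.

```python
import math


def l2(a, b):
    """Euclidean distance between two vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


# Hypothetical products: the nearest neighbors of the query are out of stock.
docs = [
    {"id": "p1", "vec": [0.1, 0.1], "in_stock": False},
    {"id": "p2", "vec": [0.2, 0.1], "in_stock": False},
    {"id": "p3", "vec": [0.9, 0.8], "in_stock": True},
    {"id": "p4", "vec": [1.5, 1.4], "in_stock": True},
]


def post_filter(query, k, pred):
    # Take the k nearest first, then drop non-matching docs: may return fewer than k.
    top = sorted(docs, key=lambda d: l2(query, d["vec"]))[:k]
    return [d["id"] for d in top if pred(d)]


def pre_filter(query, k, pred):
    # Keep only matching docs first, then rank them: returns up to k matches.
    matching = [d for d in docs if pred(d)]
    return [d["id"] for d in sorted(matching, key=lambda d: l2(query, d["vec"]))[:k]]


def in_stock(doc):
    return doc["in_stock"]


print("post-filter:", post_filter([0.0, 0.0], 2, in_stock))
print("pre-filter: ", pre_filter([0.0, 0.0], 2, in_stock))
```

Post-filtering returns nothing here, while pre-filtering finds two valid results but must rank every matching document, which becomes expensive when filters are broad. Balancing these trade-offs is the job of a filtering strategy.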

Open and extensible

Build reliable, scalable solutions that operationalize embeddings and incorporate vector search functionality with an integrated Apache 2.0-licensed vector database.

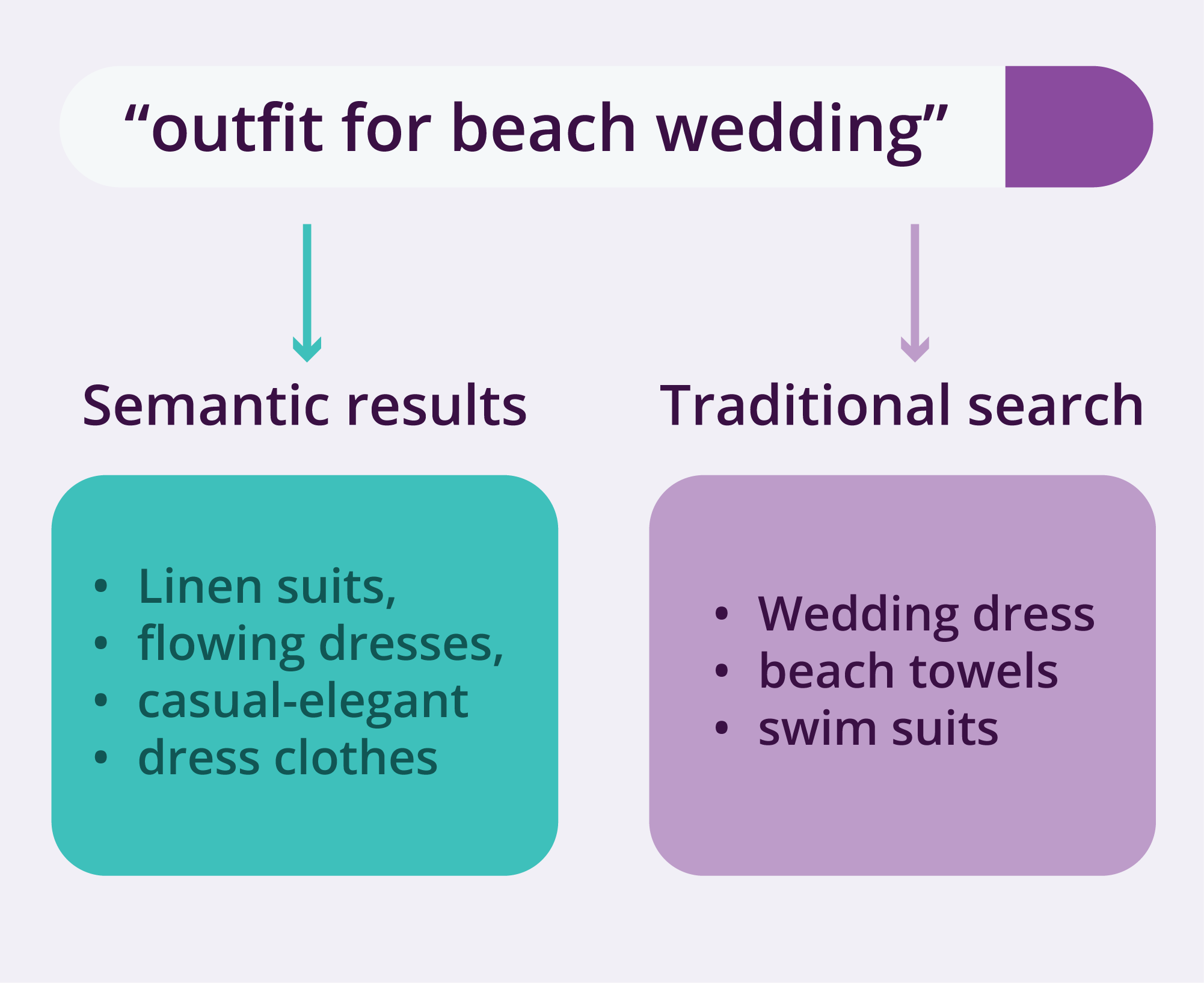

Semantic search

Improve accuracy and relevance for human language queries through searches that consider context and relationships.

Hybrid search

Combine keyword-based search with semantic, dense vector search to improve search relevance, and tune the results by normalizing and combining the relevance scores of each query.
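Keyword scores (such as BM25) and vector similarities live on different scales, so they are normalized before being combined. The sketch below uses min-max normalization and a weighted arithmetic mean, similar in spirit to the options OpenSearch search pipelines offer for hybrid search, but it is not that implementation. The raw scores, the 0.3 keyword weight, and treating a document missing from one result list as scoring 0 there are all assumptions for illustration.

```python
def min_max_normalize(scores):
    """Rescale a {doc_id: score} map to [0, 1] so different queries are comparable."""
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {doc: 1.0 for doc in scores}
    return {doc: (s - lo) / (hi - lo) for doc, s in scores.items()}


def hybrid_combine(keyword_scores, vector_scores, keyword_weight=0.3):
    """Weighted arithmetic mean of normalized scores, sorted best first.
    Assumption: a doc absent from one result list contributes 0 for that list."""
    kw = min_max_normalize(keyword_scores)
    vec = min_max_normalize(vector_scores)
    combined = {
        d: keyword_weight * kw.get(d, 0.0) + (1 - keyword_weight) * vec.get(d, 0.0)
        for d in kw.keys() | vec.keys()
    }
    return sorted(combined.items(), key=lambda item: item[1], reverse=True)


# Hypothetical raw scores: BM25 is unbounded, vector similarity is not on the same scale.
bm25 = {"doc1": 12.4, "doc2": 7.1, "doc3": 3.0}
knn = {"doc2": 0.92, "doc3": 0.88, "doc4": 0.35}
for doc, score in hybrid_combine(bm25, knn):
    print(doc, round(score, 3))
```

`doc2` ranks first because it does reasonably well in both result lists, even though `doc1` has the top keyword score.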

Built-in anomaly detection

Automatically detect unusual behavior in your data in near real time using the Random Cut Forest (RCF) algorithm.

Memory-optimized search

Optimize Faiss performance by memory-mapping indexes and relying on the operating system page cache, which avoids loading entire indexes into memory and reduces I/O.
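As a rough illustration of memory mapping in general (not of how Faiss or OpenSearch implement it), the sketch below writes fixed-size float32 vectors to a temporary file and reads one back through Python’s standard `mmap` module, without reading the whole file into memory. The file layout, dimension, and `read_vector` helper are hypothetical.

```python
import mmap
import os
import struct
import tempfile

DIM = 4  # hypothetical vector dimension
RECORD = struct.Struct(f"<{DIM}f")  # one little-endian float32 vector

# Write 1,000 small vectors to a file that stands in for an on-disk index.
path = os.path.join(tempfile.mkdtemp(), "vectors.bin")
with open(path, "wb") as f:
    for i in range(1000):
        f.write(RECORD.pack(*(float(i),) * DIM))


def read_vector(mm, i):
    """Read vector i directly from the mapping; the OS loads pages on demand."""
    return list(RECORD.unpack_from(mm, i * RECORD.size))


with open(path, "rb") as f:
    with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
        print(read_vector(mm, 42))
```

Because the mapping is backed by the file, pages that have been read can stay in the OS cache and be reused by later lookups.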

Production-ready GPU acceleration

Accelerate vector index builds in OpenSearch with GPU support, significantly reducing build times and costs for high-scale search workloads.

Use cases

OpenSearch Vector Engine’s vector database supports a range of applications. The following are just a few examples of solutions you can build.

Search

Visual search

Create applications that allow users to take a photograph and search for similar images without having to manually tag images.

Semantic search

Enhance search relevance by powering vector search with text embedding models that capture semantic meaning, and use hybrid scoring to blend in term-based ranking models such as Okapi BM25 for improved results. To learn more, see Semantic search.
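For reference, the term-based side of that blend can be sketched as the classic Okapi BM25 formula with a Lucene-style IDF and the common defaults `k1=1.2` and `b=0.75`. This is a teaching sketch, not OpenSearch’s scoring code; whitespace tokenization and the sample documents are simplifying assumptions.

```python
import math
from collections import Counter


def bm25_scores(query, docs, k1=1.2, b=0.75):
    """Okapi BM25 score of each tokenized doc for a tokenized query."""
    n_docs = len(docs)
    avgdl = sum(len(d) for d in docs) / n_docs
    df = Counter(term for d in docs for term in set(d))  # docs containing each term
    scores = []
    for doc in docs:
        tf = Counter(doc)
        score = 0.0
        for term in query:
            if tf[term] == 0:
                continue
            # Rare terms weigh more; this is the log(1 + ...) IDF variant used by Lucene.
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            # Term frequency saturates via k1, and long docs are penalized via b.
            norm = tf[term] + k1 * (1 - b + b * len(doc) / avgdl)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


docs = [
    "red running shoes".split(),
    "blue running jacket".split(),
    "red dress".split(),
]
print(bm25_scores("red shoes".split(), docs))
```

BM25 only rewards exact term matches, so a query for "sneakers" would score 0 against all three documents; the embedding-based side of hybrid search is what covers such gaps.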

Multimodal search

Use state-of-the-art models that can fuse and encode text, image, and audio inputs to generate more accurate digital fingerprints of rich media and enable more relevant search and insights. To learn more, see Multimodal search.

Generative AI agents

Build intelligent agents powered by generative AI while minimizing hallucinations. Use OpenSearch to enhance retrieval-augmented generation (RAG) workflows with large language models (LLMs) for more accurate and context-aware responses. To learn more, see Generative AI agents.

Personalization

Recommendation engine

Generate product and user embeddings using collaborative filtering techniques and use OpenSearch to power your recommendation engine. Enhance customer experiences by providing relevant repeat-purchase, try-new, and cold-start recommendations.
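In its simplest form, item-based collaborative filtering treats each product’s column of a user-by-product interaction matrix as that product’s embedding and recommends the products whose vectors are most similar. The sketch below assumes a hypothetical purchase matrix; production systems usually learn dense, lower-dimensional embeddings (for example, with matrix factorization), which could then be stored in OpenSearch as vectors.

```python
import math

# Hypothetical purchase matrix: rows are users, columns are products (1 = purchased).
products = ["tent", "sleeping bag", "camp stove", "yoga mat"]
purchases = [
    [1, 1, 1, 0],  # user A
    [1, 1, 0, 0],  # user B
    [0, 1, 1, 0],  # user C
    [0, 0, 0, 1],  # user D
]


def item_vector(j):
    """Here a product's embedding is simply its column of the purchase matrix."""
    return [row[j] for row in purchases]


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def similar_products(name, k=2):
    """Return the k products bought by the most similar sets of users."""
    j = products.index(name)
    target = item_vector(j)
    scored = [(cosine(target, item_vector(i)), products[i]) for i in range(len(products)) if i != j]
    return [p for _, p in sorted(scored, reverse=True)[:k]]


print(similar_products("tent"))
```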

User-level content targeting

Personalize web pages by using OpenSearch to retrieve content ranked by user propensities using embeddings trained on user interactions.

Related products

Deliver personalized shopping experiences by suggesting similar products based on a specific item, and guide customers to relevant products based on their browsing history or past purchases, powering “You might like this because you bought/viewed/clicked on this” recommendations. OpenSearch Vector Engine generates rich item embeddings optimized for predicting which products are likely to be purchased together, enhancing engagement and conversion rates.

Personalized product search

Improve product ranking by combining traditional text-based search algorithms like Okapi BM25 with vector search capabilities. OpenSearch Vector Engine draws on historical business data to optimize search rankings, going beyond simple language similarities to deliver highly personalized results, boosting relevance and increasing the likelihood of conversion.

Sales, marketing, and finance

Churn prediction

Optimize growth by predicting customer churn, identifying users at risk of unsubscribing or stopping purchases. Take proactive steps, such as personalized notifications, discounts, or special offers, to re-engage and retain these users. With OpenSearch Vector Engine, you can build powerful models to effectively predict and prevent churn, driving long-term customer loyalty.

Customer LTV prediction

Predict customer lifetime value (LTV) to optimize marketing, strengthen customer relationships, and maximize profitability. Forecast future revenue, refine strategies, and allocate resources more efficiently, focusing on high-value customers. With OpenSearch Vector Engine, you can build accurate models to predict LTV and drive smarter decision-making.

Email personalization

Increase email engagement with personalized content that drives active user counts and order rates. OpenSearch Vector Engine enables fast deployment of advanced recommendation models, enhancing email campaigns with tailored suggestions that deliver measurable business results.

Industrial and manufacturing

Predictive maintenance

Analyze time-series and sensor data to predict when equipment needs repair or servicing, preventing costly downtime and repairs. By storing sensor data as vector data and using ML algorithms, OpenSearch Vector Engine helps analyze equipment data to predict potential failures before they happen, enabling timely maintenance and avoiding expensive breakdowns.
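One simple way to treat sensor data as vectors is to slice a time series into fixed-length sliding windows and flag windows that sit far from every known-healthy window. The sketch below uses that nearest-neighbor distance detector. It is a swapped-in technique for illustration, not the Random Cut Forest algorithm behind OpenSearch’s anomaly detection. The readings, window size, and `THRESHOLD` are hypothetical.

```python
import math


def windows(series, size):
    """Turn a 1-D sensor series into overlapping fixed-length vectors."""
    return [series[i:i + size] for i in range(len(series) - size + 1)]


def l2(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def anomaly_score(window, normal_windows):
    """Distance to the nearest known-healthy window: large means unlike past behavior."""
    return min(l2(window, w) for w in normal_windows)


# Hypothetical vibration readings: a healthy history, then new data with a spike.
healthy = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95, 1.0, 1.1, 0.9, 1.0]
incoming = [1.0, 1.05, 0.95, 3.2, 3.4]

normal = windows(healthy, 3)
THRESHOLD = 0.5  # assumption: would be tuned on held-out healthy data
for i, w in enumerate(windows(incoming, 3)):
    score = anomaly_score(w, normal)
    print(i, w, round(score, 2), "ALERT" if score > THRESHOLD else "ok")
```

In a real deployment, the healthy windows would be indexed as vectors so that the nearest-neighbor lookup is a k-NN query rather than a linear scan.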

Quality control

Surface defects in real time during the manufacturing process to ensure product quality and reduce waste. OpenSearch Vector Engine’s built-in anomaly detection capabilities help identify issues as they occur, enabling immediate action to maintain high standards and improve efficiency.

Fraud detection

Tackle a wide range of fraud and abuse challenges, from money laundering and credit card fraud to insurance and return fraud. OpenSearch Vector Engine’s built-in anomaly detection capabilities help organizations identify suspicious activity with precision and efficiency, enhancing security and reducing risk.

Getting started

You can get started with OpenSearch Vector Engine by viewing our getting started guide or by exploring our vector search documentation. To learn more or to start a discussion, join our public Slack channel, check out our user forum, and follow our blog for the latest on OpenSearch tools and features.