OpenSearch 2.11 introduced neural sparse search—a new efficient method of semantic retrieval. In this blog post, you’ll learn about using sparse encoders for semantic search. You’ll find that neural sparse search reduces costs, performs faster, and improves search relevance. We’re excited to share benchmarking results and show how neural sparse search outperforms other search methods. You can even try it out by building your own search engine in just five steps. To skip straight to the results, see Benchmarking results.

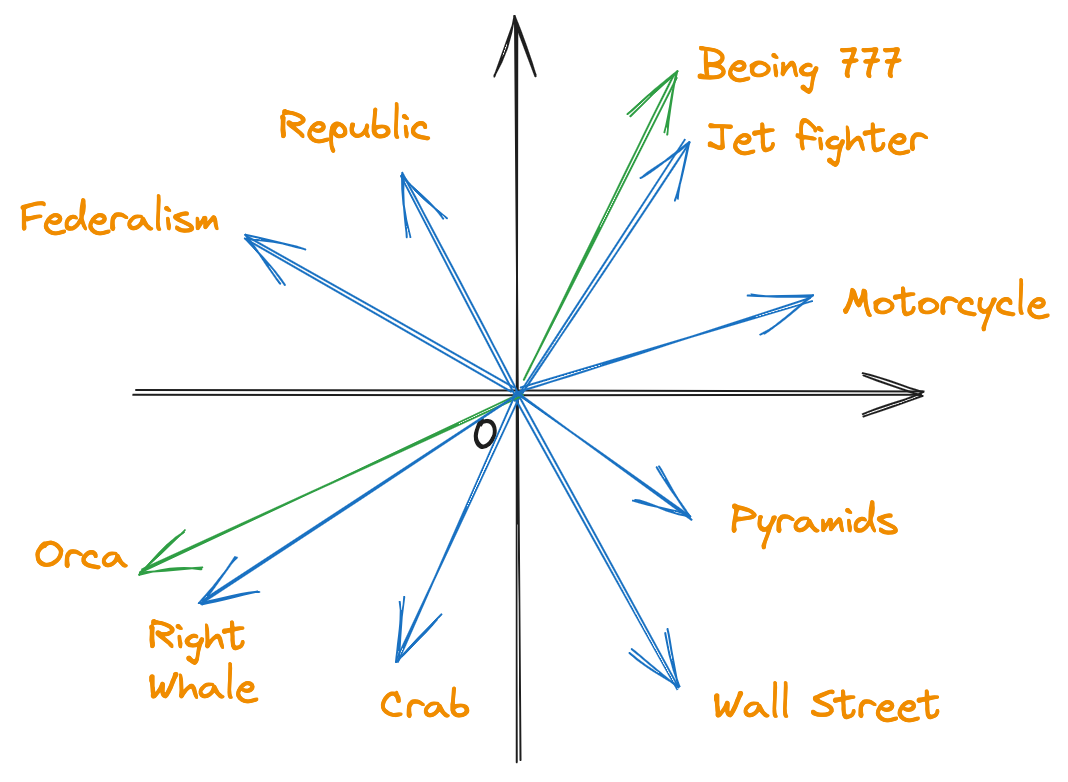

When you use a transformer-based encoder, such as BERT, to generate traditional dense vector embeddings, the encoder translates each word into a vector. Collectively, these vectors make up a semantic vector space. In this space, the closer the vectors are, the more similar the words are in meaning.

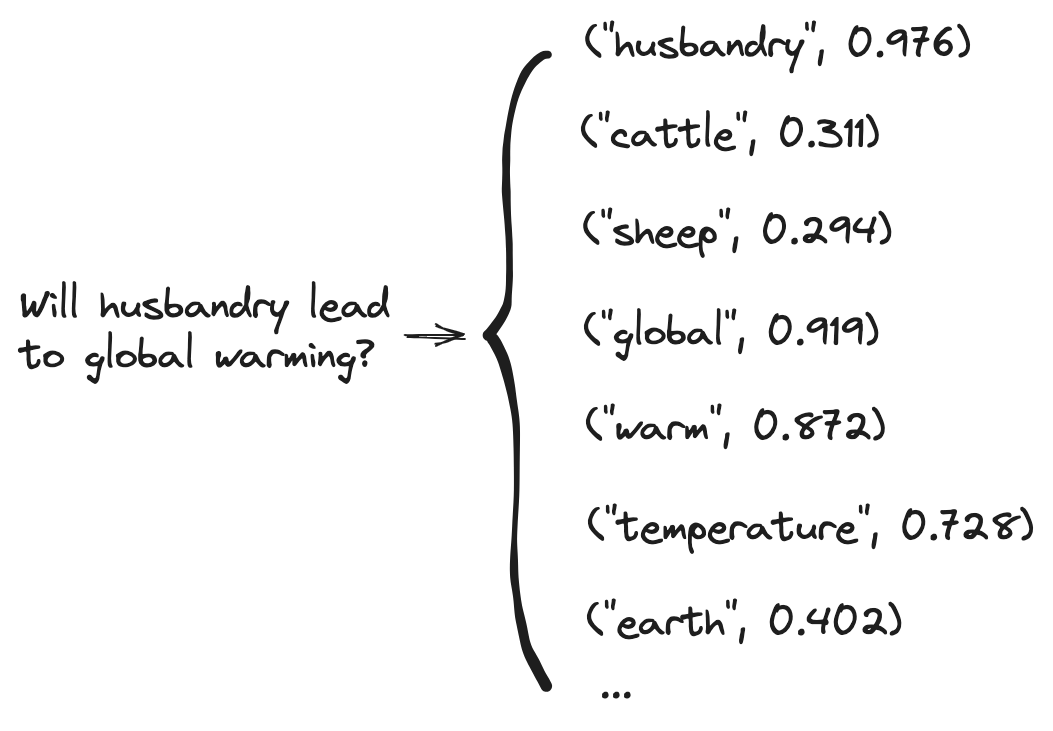

In sparse encoding, the encoder uses the text to create a list of tokens that have similar semantic meaning. The model vocabulary (WordPiece) contains most commonly used words along with various tense endings (for example, -ed and -ing) and suffixes (for example, -ate and -ion). You can think of the vocabulary as a semantic space where each document is a sparse vector.

The following images show example results of dense and sparse encoding.

|

|

Left: Dense vector semantic space. Right: Sparse vector semantic space.

-

In dense encoding, documents are represented as high-dimensional vectors. To search these documents, you need to use a k-NN index as an underlying data structure. In contrast, sparse search can use a native Lucene index because sparse encodings are similar to term vectors used by keyword-based matching.

Compared to k-NN indexes, sparse embeddings have the following cost-reducing advantages:

- Much smaller index size

- Reduced runtime RAM cost

- Lower computational cost

For a detailed comparison, see Table II.

In our previous blog post, we mentioned that searching with dense embeddings presents challenges when encoders encounter unfamiliar content. When an encoder trained on one dataset is used on a different dataset, the encoder often produces unpredictable embeddings, resulting in poor search result relevance.

Often, BM25 performs better than dense encoders on BEIR datasets that incorporate strong domain knowledge. In these cases, sparse encoders can fall back on keyword-based matching, ensuring that their search results are no worse than those produced by BM25. For a comparison of search result relevance benchmarks, see Table I.

You can run a neural sparse search in two modes: bi-encoder and document-only.

In bi-encoder mode, both documents and search queries are passed through deep encoders. In document-only mode, documents are still passed through deep encoders, but search queries are instead tokenized. In this mode, document encoders are trained to learn more synonym association in order to increase recall. By eliminating the online inference phase, you can save computational resources and significantly reduce latency. For benchmarks, compare the

Neural sparse document-onlycolumn with the other columns in Table II.For benchmarking, we used a cluster containing 3

r5.8xlargedata nodes and 1r5.12xlargeleader/machine learning (ML) node. We measured search relevance for all evaluated search methods in terms of NCDG@10. Additionally, we compared the runtime speed and the resource cost of each method.Here are the key takeaways:

- Both modes provide the highest relevance on the BEIR and Amazon ESCI datasets.

- Without online inference, the search latency of document-only mode is comparable to BM25.

- Sparse encoding results in a much smaller index size than dense encoding. A document-only sparse encoder generates an index that is 10.4% of the size of a dense encoding index. For a bi-encoder, the index size is 7.2% of the size of a dense encoding index.

- Dense encoding uses k-NN retrieval and incurs a 7.9% increase in RAM cost at search time. Neural sparse search uses a native Lucene index, so the RAM cost does not increase at search time.

The benchmarking results are presented in the following tables.

BM25 Dense (with TAS-B model) Hybrid (Dense + BM25) Neural sparse search bi-encoder Neural sparse search document-only Dataset NDCG Rank NDCG Rank NDCG Rank NDCG Rank NDCG Rank Trec-Covid 0.688 4 0.481 5 0.698 3 0.771 1 0.707 2 NFCorpus 0.327 4 0.319 5 0.335 3 0.36 1 0.352 2 NQ 0.326 5 0.463 3 0.418 4 0.553 1 0.521 2 HotpotQA 0.602 4 0.579 5 0.636 3 0.697 1 0.677 2 FiQA 0.254 5 0.3 4 0.322 3 0.376 1 0.344 2 ArguAna 0.472 2 0.427 4 0.378 5 0.508 1 0.461 3 Touche 0.347 1 0.162 5 0.313 2 0.278 4 0.294 3 DBPedia 0.287 5 0.383 4 0.387 3 0.447 1 0.412 2 SciDocs 0.165 2 0.149 5 0.174 1 0.164 3 0.154 4 FEVER 0.649 5 0.697 4 0.77 2 0.821 1 0.743 3 Climate FEVER 0.186 5 0.228 3 0.251 2 0.263 1 0.202 4 SciFact 0.69 3 0.643 5 0.672 4 0.723 1 0.716 2 Quora 0.789 4 0.835 3 0.864 1 0.856 2 0.788 5 Amazon ESCI 0.081 3 0.071 5 0.086 2 0.077 4 0.095 1 Average 0.419 3.71 0.41 4.29 0.45 2.71 0.492 1.64 0.462 2.64 * For more information about Benchmarking Information Retrieval (BEIR), see the BEIR GitHub page.

BM25 Dense (with TAS-B model) Neural sparse search bi-encoder Neural sparse search document-only P50 latency (ms) 8 ms 56.6 ms 176.3 ms 10.2ms P90 latency (ms) 12.4 ms 71.12 ms 267.3 ms 15.2ms P99 Latency (ms) 18.9 ms 86.8 ms 383.5 ms 22ms Max throughput (op/s) 2215.8 op/s 318.5 op/s 107.4 op/s 1797.9 op/s Mean throughput (op/s) 2214.6 op/s 298.2 op/s 106.3 op/s 1790.2 op/s * We tested latency on a subset of MS MARCO v2 containing 1M documents in total. To obtain latency data, we used 20 clients to loop search requests.

BM25 Dense (with TAS-B model) Neural sparse search bi-encoder Neural sparse search document-only Index size 1 GB 65.4 GB 4.7 GB 6.8 GB RAM usage 480.74 GB 675.36 GB 480.64 GB 494.25 GB Runtime RAM delta +0.01 GB +53.34 GB +0.06 GB +0.03 GB * We performed this experiment using the full MS MARCO v2 dataset, containing 8.8M passages. For all methods, we excluded the

_sourcefields and force merged the index before measuring index size. We set the heap size of the OpenSearch JVM to half of the node RAM, so an empty OpenSearch cluster still consumed close to 480 GB of memory.Follow these steps to build your search engine:

-

Prerequisites: For this simple setup, update the following cluster settings:

PUT /_cluster/settings { "transient": { "plugins.ml_commons.only_run_on_ml_node": false, "plugins.ml_commons.native_memory_threshold": 99 } }

For more information about ML-related cluster settings, see ML Commons cluster settings.

-

Deploy encoders: The ML Commons plugin supports deploying pretrained models using a URL. For this example, you’ll deploy the

opensearch-neural-sparse-encodingencoder:POST /_plugins/_ml/models/_register?deploy=true { "name": "amazon/neural-sparse/opensearch-neural-sparse-encoding-v1", "version": "1.0.1", "model_format": "TORCH_SCRIPT" }

OpenSearch responds with a

task_id:{ "task_id": "<task_id>", "status": "CREATED" }Use the

task_idto check the status of the task:GET /_plugins/_ml/tasks/<task_id>Once the task is complete, the task state changes to

COMPLETEDand OpenSearch returns themodel_idfor the deployed model:{ "model_id": "<model_id>", "task_type": "REGISTER_MODEL", "function_name": "SPARSE_ENCODING", "state": "COMPLETED", "worker_node": [ "wubXZX7xTIC7RW2z8nzhzw" ], "create_time": 1701390988405, "last_update_time": 1701390993724, "is_async": true } -

Set up ingestion: In OpenSearch, a

sparse_encodingingest processor encodes documents into sparse vectors before indexing them. Create an ingest pipeline as follows:PUT /_ingest/pipeline/neural-sparse-pipeline { "description": "An example neural sparse encoding pipeline", "processors" : [ { "sparse_encoding": { "model_id": "<model_id>", "field_map": { "passage_text": "passage_embedding" } } } ] }

-

Set up index mapping: Neural search uses the

rank_featuresfield type to store token weights when documents are indexed. The index will use the ingest pipeline you created to generate text embeddings. Create the index as follows:PUT /my-neural-sparse-index { "settings": { "default_pipeline": "neural-sparse-pipeline" }, "mappings": { "properties": { "passage_embedding": { "type": "rank_features" }, "passage_text": { "type": "text" } } } }

-

Ingest documents using the ingest pipeline: After creating the index, you can ingest documents into it. When you index a text field, the ingest processor converts text into a vector embedding and stores it in the

passage_embeddingfield specified in the processor:PUT /my-neural-sparse-index/_doc/ { "passage_text": "Hello world" }

Try your engine with a query clause

Congratulations! You’ve now created your own semantic search engine based on sparse encoders. To try a sample query, invoke the

_searchendpoint using theneural_sparsequery:GET /my-neural-sparse-index/_search/ { "query": { "neural_sparse": { "passage_embedding": { "query_text": "Hello world a b", "model_id": "<model_id>" } } } }

The

neural_sparsequery supports three parameters:query_text(String): The query text from which to generate sparse vector embeddings.model_id(String): The ID of the model that is used to generate tokens and weights from the query text. A sparse encoding model will expand the tokens from query text, while the tokenizer model will only tokenize the query text itself.query_tokens(Map<String, Float>): The query tokens, sometimes referred to as sparse vector embeddings. Similarly to dense semantic retrieval, you can use raw sparse vectors generated by neural models or tokenizers to perform a semantic search query. Use either thequery_textoption for raw field vectors or thequery_tokensoption for sparse vectors. Must be provided in order for theneural_sparsequery to operate.

OpenSearch provides several pretrained encoder models that you can use out of the box without fine-tuning. For a list of sparse encoding models provided by OpenSearch, see Sparse encoding models. We have also released the models in Hugging Face model hub.

Use the following recommendations to select a sparse encoding model:

-

For bi-encoder mode, we recommend using the

opensearch-neural-sparse-encoding-v2-distillpretrained model. For this model, both online search and offline ingestion share the same model file. -

For document-only mode, we recommended using the

opensearch-neural-sparse-encoding-doc-v3-distillpretrained model for ingestion and theopensearch-neural-sparse-tokenizer-v1model at search time to implement online query tokenization. This model does not employ model inference and only translates the query into tokens.

- For more information about neural sparse search, see Neural sparse search.

- For an end-to-end neural search tutorial, see Neural search tutorial.

- For a list of all search methods OpenSearch supports, see Search methods.

- Provide your feedback on the OpenSearch Forum.

Read more about neural sparse search: